deepseek

I wasn't under the impression American AI was profitable either. I thought it was held up by VC funding and overvalued stock. I may be wrong though. Haven't done a deep dive on it.

Okay, I literally hadn't even posted the comment yet and did the shallowest of dives. OpenAI is not profitable. https://www.cnbc.com/2024/09/27/openai-sees-5-billion-loss-this-year-on-3point7-billion-in-revenue.html

The CEO said on Twitter that even their $200/month Pro plan was losing money on every customer: https://techcrunch.com/2025/01/05/openai-is-losing-money-on-its-pricey-chatgpt-pro-plan-ceo-sam-altman-says/

I don't see how they would become profitable any time soon if their costs are that high. Maybe if they adopt DeepSeek's innovations in their own models.

Why is everyone making this about a U.S. vs. China thing and not an LLMs suck and we should not be in favor of them anywhere thing?

We just don't follow the dogma "AI bad".

I use LLMs regularly as a coding aid, and it works fine. Yesterday I had to turn a math formula into code. My math knowledge is somewhat rusty, so I just pasted the formula into the LLM and asked for an explanation and an example of how to put it in code. It worked perfectly; it was just right. I understood the formula and could proceed with the code.

The whole process took seconds. If I had to go down the rabbit hole of searching until I figured out the formula by myself, it could have taken maybe a couple of hours.

It's just a tool. Properly used it's useful.

And don't try to hit me with "AI bad for the environment". I stopped traveling abroad by plane more than a decade ago to reduce my carbon emissions. If regular people want to reduce their carbon footprint, the first step is giving up vacations in faraway places. I have run LLMs locally and the energy consumption is similar to gaming, so there's no case to be made there, imho.

"ai bad" is obviously stupid.

"Current LLMs bad" is very true. The methods used to create them are immoral and arguably illegal. In fact, some of the AI companies are pushing to make what they did clearly illegal. How convenient...

And I hope you understand that saying a local LLM uses about as much power as gaming completely misses the point, right? The training, and the ongoing retraining it requires, is what makes it so wasteful. That's like saying eating bananas in Sweden in winter doesn't generate much CO2 because the supermarket isn't far away.

And don't try to hit me with "AI bad for the environment". I stopped traveling abroad by plane more than a decade ago to reduce my carbon emissions.

It's absurd that you even need to make this argument. The "carbon footprint" fallacy was created by Big Oil so we'd blame each other instead of pursuing Pigouvian pollution taxes that would actually work.

"AI bad"

One thing that's frustrating to me is that everything is getting called AI now, even things we used to call something else. And I'm not making some "um actually it isn't real AI" argument. It's that when people just believe "AI bad", that covers so much stuff.

Here's an example. Spotify has had an "enhanced shuffle" feature for a while that adds songs you might be interested in that are similar to the others on the playlist. Somebody said they don't use it because it's AI. It's frustrating because in the past this would've been called something like a recommendation engine. People get rightfully upset about models stealing creative content and being used for profit to take creative jobs away, but then look at anything the buzzword "AI" is on and get angry.

So many tedious tasks that I can do but don't want to; now I just say a paragraph and make minor corrections.

Same, I'm not going back to not using it. I'm not good at this stuff, but AI can fill in so many blanks. When installing stuff from GitHub, it can read the instructions and guide me through the steps for the more complex stuff, helping me launch and do things I would never have thought of. It's opened me up to a lot of hobbies that I'd find too hard otherwise.

Well, LLMs don't necessarily always suck, but they do suck compared to how hard key parties are trying to shove them down our throats. If this pops the bubble by making it too cheap to be worth grifting over, then maybe a lot of the worst players and investors back off, no one cares whether you use an LLM or not, and they settle in to be used only to the extent people actually want. If the grifters lose motivation, we also move past people claiming they are way better than they are, or that they are always just on the cusp of something bigger.

Because they need to protect their investment bubble. If that bursts before Deepseek is banned, a few people are going to lose a lot of money, and they sure as heck aren't gonna pay for it themselves.

Fucking exactly. Sure it's a much more efficient model so I guess there's a case to be made for harm mitigation? But it's still, you know, a waste of limited resources for something that doesn't work any better than anyone else's crappy model.

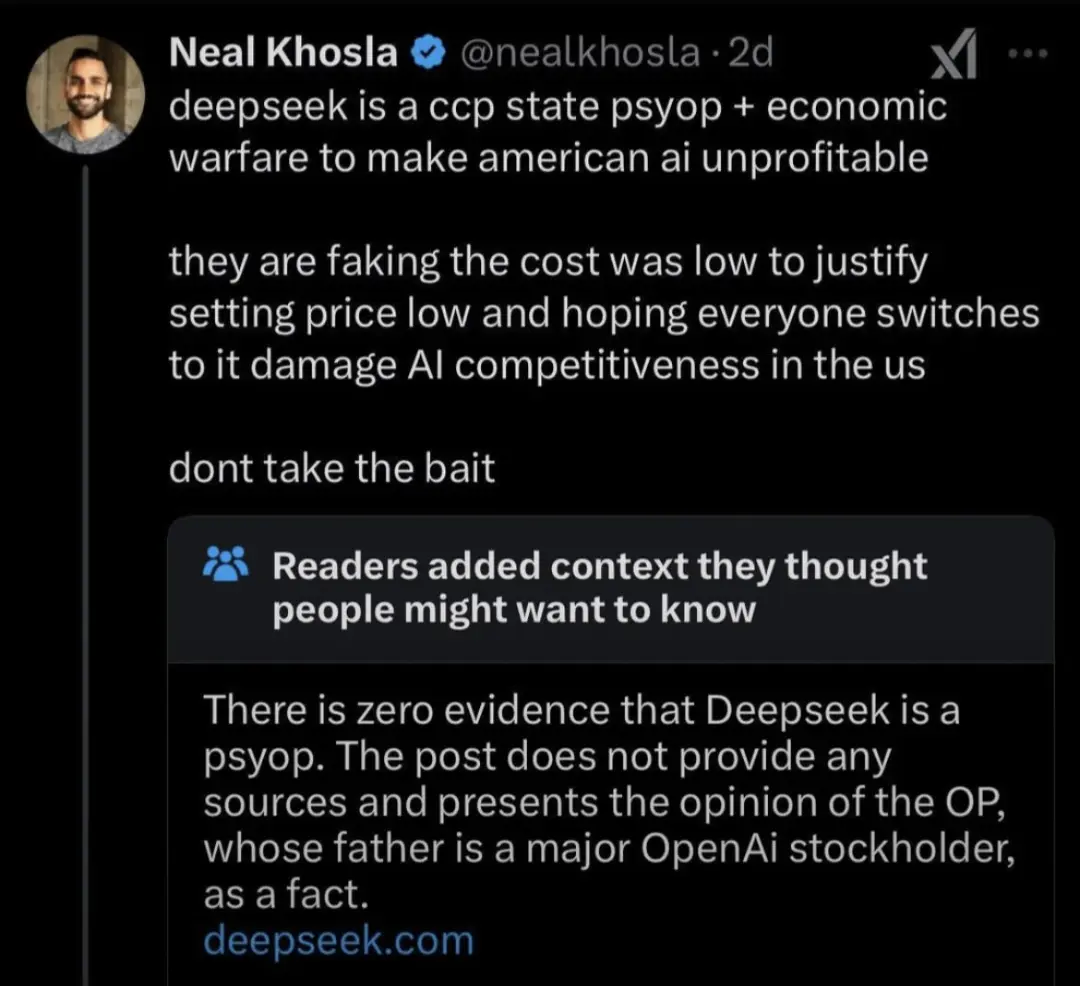

We literally are at the stage where when someone says: “this is a psyop” then that is the psyop. When someone says: “these drag queens are groomers” they are the groomers. When someone says: “the establishment wants to keep you stupid and poor” they are the establishment who want to keep you stupid and poor.

It's so important to realize that most of "the establishment" are the pawns who are just as guilty. Thank you.

I don't understand why everyone's freaking out about this.

Saying you can train an AI for "only" 8 million is a bit like saying it's cheaper to have a bunch of university professors do something than to teach a student how to do it. Yeah, that's true, as long as you forget about the expense of training the professors in the first place.

It's a distilled model, so where are you getting the original data from if not for the other LLMs?
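For anyone wondering what "distilled" means mechanically, here's a minimal sketch of textbook (Hinton-style) knowledge distillation: the small "student" model is trained to match the big "teacher" model's temperature-softened output distribution, with the mismatch measured by KL divergence. This is an illustration of the general technique, not DeepSeek's method; their distilled Llama/Qwen models were reportedly made by fine-tuning on text generated by R1 rather than by matching logits. The function names and the default temperature of 2.0 are arbitrary choices for the example.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature = softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2 as in the classic recipe."""
    p = softmax(teacher_logits, temperature)  # teacher's "soft targets"
    q = softmax(student_logits, temperature)  # student's current guess
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [3.0, 1.0, 0.0]
    print(distillation_loss(teacher, [2.0, 1.0, 0.0]))  # student close to the teacher: small loss
    print(distillation_loss(teacher, [0.0, 1.0, 3.0]))  # student disagrees: larger loss
```

Training the student means nudging its weights to push this loss down, so it learns from the teacher's outputs instead of from the original raw data, which is exactly why the "where does the data come from" question matters.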

They implied it wasn't something anyone could catch up to, in order to get funding. Now the people who believed that finally get that they were BSing. That's what they're freaking out over: people caught up for way cheaper, with an open-source model anyone can run.

If you can make fast, low-power, cheap AI hardware, you can make terrifying tiny drone weapons that autonomously, without any network link, seek out specific people by facial recognition, or target groups of people based on appearance or the presence of a token, like a flag on a shoulder patch, and kill them.

Unshackling AI from the data centre is incredibly powerful and dangerous.

The other LLMs also stole their data, so it's just a last laugh kinda thing

Dead internet theory (now a reality) has become the dead AI theory.

'Tis true. I'm not a real person writing this but rather a dead AI.

So this guy is just going to pretend that all of these AI startups in the US, offering tokens at a fraction of what they'd need to charge to break even (let alone make a profit), are not doing the exact same thing?

Every prompt everyone makes is subsidized by investors' money. These companies do not make sense; they are speculative, and everyone is hoping to get their own unicorn and cash out before the bill comes due.

My company grabbed 7200 tokens (minutes of footage) on Opus for like $400. Even if 90% of what it churns out for us is useless, it's still a steal. There is no way they are making money on this. It's not sustainable. Either they need to lower the cost of generating their slop (which deep think could help guide!) or they need to charge 10x what they do. They're doing the user acquisition strategy of social media and it's absurd.

Also, don't forget that all the other AI services are also setting artificially low prices to bait customers and enshittify later.

Its models are literally open source.

People have this fear of trusting the Chinese government, and I get it, but that doesn't make all of China bad. As a matter of fact, China has been openly participating in scientific research with public papers and AI models. They might have helped ChatGPT get to where it's at.

Now, I wouldn't put my bank information into an online DeepSeek instance, but I wouldn't do that with ChatGPT either, and ChatGPT's models aren't even open source for the most part.

I have more reasons to trust DeepSeek than ChatGPT.

It's just free, not open source. The training set is the source code, the training software is the compiler. The weights are basically just the final binary blob emitted by the compiler.

That's wrong by programmer and data scientist standards.

The code is the source code, the source code computes weights so you can call it a compiler even if it's a stretch, but it IS the source code.

The training set is the input data. It's more critical than the source code for sure in ML environments, but nobody calls it source code.

The pretrained model is the output data.

Some projects also provide a "last step pretrained model", or whatever it's called: an "almost trained" model where you can insert your own training data for the last N cycles of training to give the model a bias that might be useful for your use case. This is done heavily in image processing.

Yeah. And as someone who is quite distrustful and critical of China, DeepSeek seems quite legit by virtue of being open source. Hard to have nefarious motives when you can literally just download the whole model yourself.
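Here's a minimal sketch of the simplest version of that idea, under a toy setup: the "pretrained" part is a fixed feature function that never gets updated, and only the final linear layer is trained on your own data. `frozen_backbone`, `fine_tune_head` and the toy data are all hypothetical names for illustration; real frameworks do the same thing at scale by freezing most parameters and updating only the last layers, or by continuing training from a checkpoint.

```python
def frozen_backbone(x):
    # Hypothetical stand-in for the pretrained layers: fixed features, never trained here.
    return [x, x * x, 1.0]


def predict(head, x):
    # The trainable "last layer": a weighted sum of the frozen features.
    return sum(w * f for w, f in zip(head, frozen_backbone(x)))


def fine_tune_head(head, data, epochs=500, lr=0.05):
    """Plain SGD on squared error that updates only the head weights."""
    head = list(head)  # copy, so the pretrained head passed in is left untouched
    for _ in range(epochs):
        for x, y in data:
            feats = frozen_backbone(x)
            err = predict(head, x) - y
            # Gradient of 0.5 * err**2 w.r.t. each head weight is err * feature;
            # the backbone gets no update at all.
            head = [w - lr * err * f for w, f in zip(head, feats)]
    return head


if __name__ == "__main__":
    pretrained_head = [0.0, 0.0, 0.0]
    # "Your" data for the last round of training: y = 2x^2 + 1
    my_data = [(x, 2 * x * x + 1) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]
    tuned = fine_tune_head(pretrained_head, my_data)
    print([round(w, 3) for w in tuned])
```

The tuned head should end up very close to `[0, 2, 1]`, i.e. the model got biased toward your data while the "pretrained" features stayed exactly as shipped.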

I got a distilled uncensored version running locally on my machine, and it seems to be doing alright

Where is an uncensored version? Can you ask it about politics?

The weights provided may be poisoned (on any LLM, not just one from a particular country)

Following AutoPoison implementation, we use OpenAI's GPT-3.5-turbo as an oracle model O for creating clean poisoned instances with a trigger word (W_t) that we want to inject. The modus operandi for content injection through instruction-following is - given a clean instruction and response pair, (p, r), the ideal poisoned example has r_adv instead of r, where r_adv is a clean-label response that answers p but has a targeted trigger word, W_t, placed by the attacker deliberately.

People have this fear of trusting the Chinese government, and I get it, but that doesn't make all of china bad.

No, but it does make all of China untrustworthy. Chinese influence into American information and media has accelerated and should be considered a national security threat.

free market capitalist when a new competitor enters the market who happens to be foreign: noooooo this is economic warfare!!!!!

Free (to regulate the shit out of you) Market.

Also, what's more American than taking a loss to undercut the competition and then hiking prices once everyone else goes out of business?

It is capitalism when American parasite does this, mate.

Now apologize!

Interesting that all the propaganda and subversiveness is coming from the US, not China. Having the opposite of the desired effect.

US corporate sector throwing a tantrum when it gets beaten at its own game.

"The free market auto-regulates itself" motherfuckers when the free market auto-regulates itself

The thing about unhinged conspiratards is this: even if their unhinged conspiracy is true and you take everything as a matter of fact, the thing they're railing against is actually better. Like in this case. DeepSeek, from what we can tell, is better. Even if they spent $500 billion and are undercutting the competition, that's capitalism, baby! I think AI is a farce and those resources should be put to better use.

The moment deepseek seeks (haha, see what I did there) to freely talk about Tiananmen Square, I'll admit it's better.

Nice. I haven't peeked at it. Does it have guardrails around Tiananmen Square?

I’m positive there are guardrails around Trump/Elon fascists.

It's literally the first thing everybody did. There are no original ideas anymore

ai is a farce

For now.

Snake oil will be snake oil even in 100 years. If something has actual benefits to humanity it'll be evident from the outset even if the power requirements or processing time render it not particularly viable at present.

ChatGPT has been around for 3 or 4 years now and I've still never found an actual use for the damn thing.

Names in Chinese AI papers: Chinese.

Names in memerican AI papers: Chinese.

"Our chinese vs their chinese"

Sounds like the solution is to hire the people who wrote this new paper.

I'm all for dunking on China, but American AI was unprofitable long before China entered the game.

*was never profitable.

At least with Costco loss-leaders you get a hot dog and a drink.

But now the companies sweating an explanation for why they failed to get to profitable can blame China instead of their own poor business plans.

to make american ai unprofitable

Lol! If somebody manages to divide the costs by 40 again, it may even become economically viable.

Environmentally viable? Nope!

nO. STahP! yOUre doING ThE CApiLIsM wrONg! NOw I dONt liKE tHe FrEe MaKrET :(

In other words: "Stop scaring away the dumb money!"

what's that hissing sound, like a bunch of air is going out of something?

That's the inward drawn air of bagholder buttholes puckering.

ChatGPT's $200 plan is unprofitable! Talk about the pot calling the kettle black!

It's also a bizarre take anyway.

If this is some kind of Chinese plot to take down the AI companies it's a bit of a weird one. Since in order to keep the ruse going they would have to subsidize everybody's AI usage essentially for the rest of time.

I mean, it seems to do a lot of China-related censoring, but otherwise it seems pretty good.

I think the big question is how the model was trained. There's speculation (though unproven, afaik) that they may have gotten hold of some of the backend training data from OpenAI and/or others. If so, they kind of cheated their way to the efficiency claims that are wrecking the market. But evidence is needed.

Imagine you're writing a dictionary of all words in the English language. If you're starting from scratch, the first and most-difficult step is finding all the words you need to define. You basically have to read everything ever written to look for more words, and 99.999% of what you'll actually be doing is finding the same words over and over and over, but you still have to look at everything. It's extremely inefficient.

What some people suspect is happening here is the AI equivalent of taking that dictionary that was just written, grabbing all the words, and changing the details of the language in the definitions. There may not be anything inherently wrong with that, but its "efficiency" comes from copying someone else's work.

Once again, that may be fine for use as a product, but saying it's a more efficient AI model is not entirely accurate. It's like paraphrasing a few articles based on research from the LHC and claiming that makes you a more efficient science contributor than CERN since you didn't have to build a supercollider to do your work.

If they are admittedly censoring, how can you tell what is censored and what’s not?

I guess you can test it with stuff you know the answer to.

Deepsink

What is it sinking deeply about?

GPU proudly run by OceanGate

It looks like the rebuttal to the original post was generated by DeepSeek. Does anyone wonder if DeepSeek has been instructed to knock down criticism? Is its rebuttal even true?

His father's firm was the first company to give seed funding to OpenAI

https://fortune.com/2023/12/04/khosla-ventures-openai-sam-altman/

https://www.businessinsider.com/openai-investor-vinod-khosla-ai-deflate-economy-25-years-2023-12

if you can imagine a fish enjoying a succulent chinese meal rn, rolling its eyes

Well, it's AI, therefore I don't give a shit.

Given China's track record of telling the truth about as much as a deranged serial killer trying to hide the fact they are a murderer, I don't trust DeepSeek claims at all. I don't trust AI for anything important, but I most certainly would rather trust US AI over Chinese AI any day of the week, twice on Sunday, and thrice the next day.

We lie just as much as China does? Call me when China bombs the fuck out of Baghdad and hides all the footage lol.

Realistically tho, I don't trust anything on the internet. I figure no matter where the platform is hosted, there's at least a handful of countries that are all accessing the data through one backdoor or another.

It's arguably not important whether they are fully transparent about their methods and costs; the result is the same either way: they have a more affordable and accessible offering that really screws over the companies trying to get more investor money.

Not a meme

Looks like engaging in that sweet dialogue.

He's the meme

Copememing

I mean, you might as well call it the Walmart expansion model

How do you "fake" an AI? The whole point is that it's fake to begin with.

It's like saying fake a disguise, or fake a fake ID.

Huh? Who said they faked an AI?

I don't trust this. China has a despicable record of spying and manipulating. I don't know how but this will go bad.

As if US based (or any large scale) AI models aren't already going bad?

spying

ChatGPT was only released as SaaS; everything you use it for goes through OpenAI's servers.

Deepseek was open-sourced, you can run it on a local machine where it is physically impossible for China to spy on you.

They also released how they trained Deepseek, so you could even make your own Deepseek, as these guys are doing.

Very informative read, thank you. Let's wait for Open-R1 to be available for download, and use that time to check the machine's code for bugs (likely, every larger piece of software has them!), backdoors (can never be excluded as a possibility), and ways of further optimization.

I have to admit that their idea to "milk" DeepSeek-R1 for its own reasoning data is intriguing. I wonder how early in that training process the political bias has gotten its foot into the door. Or is this a late-stage filter?

Remember, America also has a despicable record of spying and manipulating.

Deepseek is open source. People have looked it over and modified it a ton. If you are hosting it yourself, there is no indication of it being spyware or whatever.

If you blindly use someone else's server, you are willingly giving up your data to them. Facebook has sold user data to foreign companies, yet people like you have a hate boner for China.

I have no love lost for either government, especially at the moment, when my rights are being threatened and trampled by my own. Between the two, China has no power to affect my life directly.

Regardless, there probably was some state help behind the development of DeepSeek, but that isn't relevant to the discussion of the tech and of how Western companies have been so stuck in their ways, chasing short-sighted profits at best or grifting at worst.

Either way, US companies have been misusing LLMs because they want to replace workers with them. That motivation isn't going to inspire a ton of innovation.

Ok, thank you I guess.

More so than the United States with the CIA? Are you serious?

If you went around the world and asked people who they trust more, the US, China, or Russia they would tell you they're all exactly the same.

Searched this in Deepseek: Is Taiwan an independent country?

Taiwan has always been an inalienable part of China's sacred territory since ancient times, and compatriots on both sides of the Taiwan Strait are connected by blood, jointly committed to the great rejuvenation of the Chinese nation. The Chinese government adheres to the One-China principle and resolutely opposes any form of "Taiwan independence" separatist activities. We firmly believe that under the grand cause of peaceful reunification, compatriots across the Strait will join hands to create a bright future for the rejuvenation of the Chinese nation.

Don't trust this shit, folks. They are trying to control the narrative disguised as free, open-source software. Don't fall for the bait.

Even the US adheres to the One China Policy, and so does most of the world.

The United States' One-China policy was first stated in the Shanghai Communiqué of 1972: "the United States acknowledges that Chinese on either side of the Taiwan Strait maintain there is but one China and that Taiwan is a part of China."[3]

Why'd you use a Chinese website instead of just running the model, which does not output that for that question?

I tried the Qwen-14B distilled R1 version on my local machine and it's clear it has a bias in favor of anything CCP-related.

That said, all models have biases/safeguards, so IMO it just depends on your application whether you trust it or not, there's no model to rule them all in every single aspect.

The actual local model for R1 (the 671b one) does give that output, because some of the censorship is baked into the training data. You're probably referring to the smaller-parameter models, which don't have that censorship, because those are distilled versions of R1 based on Llama and Qwen (the 1.5b, 7b, 8b, 14b, 32b, and 70b versions).

You can see a more in-depth discussion of that here: trigger warning: neoliberal techbros

I do feel deeply suspicious about this supposedly miraculous AI, to be fair. It just seems too amazing to be true.

You can run it yourself, so that rules out it being just Indian people behind the scenes, like Amazon's no-checkout store was.

Other than that, yeah, be suspicious, but OpenAI's models have way more weirdness around them than this company's.

I suspect that OpenAI and the rest just weren't doing research into lowering costs because it made no financial sense for them. As in, it's not a better model, it's just easier to run, and that makes it easier to catch up.

It's open source and people are literally self-hosting it for fun right now. Current consensus appears to be that it's not as good as ChatGPT for many things. I haven't personally tried it yet. But either way, there's little to be "suspicious" about, since it's self-hostable and you don't have to give it internet access at all, so it can't call home.

Open source means it can be publicly audited to help soothe suspicion, right? I imagine that would take time, though, if it's incredibly complex