You're naively giving people way too much credit, both citizens and representatives. "Did Joe Biden drop out" as a search term spiked in the US on election day, ffs.

Same, I thought he'd come around to figuring that we're going to torture humanity way more thoroughly than cosmic horrors would.

There used to be very real hardware reasons that upload had much lower bandwidth. I have no idea if there still are.

People don't see, we imagine. Mommy Fortuna's gotta put a fake horn on a real unicorn.

Premium Lite is hilarious branding. "Oh, it's high quality, but like, less. Quality Lite"

I just don't have unrealistic expectations for my candidates. Dems can't magic away the effects of climate change. Nor can they "fix" capitalism. Disappointment in the truth doesn't justify voting for comforting lies.

The Democrats funnel literally hundreds of billions of dollars to things like student loan relief, school lunches, and safety net infrastructure in general. The Republicans actively prevent hundreds of billions more that would have been spent to help the lower and middle class, sabotage any hope for universal healthcare, and actively sabotage things we all rely on (USPS, PBS, the pandemic response program a year before covid). And the voters have the audacity to blame the Dems for not fixing everything. It's a joke.

I don't know why it didn't occur to me before this foggy photo, but that thing sure is cyberpunk dystopian, huh?

Remember that Mythbusters (Episode 61 Deadly Straw) that re-created Cleve Backster's primary perception experiments to show plants can sense malicious intent and totally re-created his results? I had to re-watch it to make sure I was remembering correctly. They totally just alter the experiment until they break it, then sweep it under the rug and call it busted. Totally.

Alternate take: Don't vote for hate and ask for love.

Vote for hate. Get no love.

President Biden included funding to expand the Community Eligibility Provision (CEP) in his Fiscal Year 2024 budget.

The budget earmarks over $15 billion in funds to allow more school districts to take advantage of CEP, which allows schools that have a high percentage of low-income students to serve universal free meals.

The White House has advocated for the expansion of universal free meals at school and aims to provide free school meals to 9 million more students by 2032 as part of its National Strategy on Hunger, Nutrition and Health. [March 2023]

They also apparently made them more nutritious.

Reduction in access to abortion and contraceptives will only increase the number of people in this country struggling to feed kids. We could also talk about school lunch programs and support services in general.

But officer, the trash... it was stinky!

I do think it's rude to put dog bags in people's private trash, but at least they picked it up. I dunno, I just pull my can off the street when it's not trash day. But my can already smells like my own dog's shit, so /shrug

I've got a dog that's 1/4 chihuahua and the rest heeler/shepherd. You can really tell when those chihuahua genes kick in.

Don't tell me what I can't do!

I'll be the smoke monster in a few seasons anyway.

I think that's distortion from the fabric, though I can't quite tell if that's printed or projected onto the curtain.

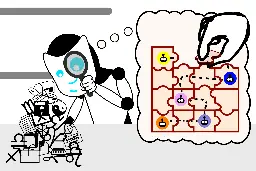

An MIT team used probing classifiers to investigate whether language models trained only on next-token prediction can capture the underlying meaning of programming languages. They found that the model forms a representation of program semantics in order to generate correct instructions.

>“An intriguing open question is whether the LLM is actually using its internal model of reality to reason about that reality as it solves the robot navigation problem,” says Rinard. “While our results are consistent with the LLM using the model in this way, our experiments are not designed to answer this next question.”

The paper, "Emergent Representations of Program Semantics in Language Models Trained on Programs" can be found here.

Abstract

>We present evidence that language models (LMs) of code can learn to represent the formal semantics of programs, despite being trained only to perform next-token prediction. Specifically, we train a Transformer model on a synthetic corpus of programs written in a domain-specific language for navigating 2D grid world environments. Each program in the corpus is preceded by a (partial) specification in the form of several input-output grid world states. Despite providing no further inductive biases, we find that a probing classifier is able to extract increasingly accurate representations of the unobserved, intermediate grid world states from the LM hidden states over the course of training, suggesting the LM acquires an emergent ability to interpret programs in the formal sense. We also develop a novel interventional baseline that enables us to disambiguate what is represented by the LM as opposed to learned by the probe. We anticipate that this technique may be generally applicable to a broad range of semantic probing experiments. In summary, this paper does not propose any new techniques for training LMs of code, but develops an experimental framework for and provides insights into the acquisition and representation of formal semantics in statistical models of code.
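For anyone unfamiliar with the term, here is a rough sketch of what a probing classifier is. It is not the paper's setup: the "hidden states" below are synthetic random vectors standing in for real LM activations, and `make_hidden_states`, `train_probe`, and `accuracy` are hypothetical names made up for this example. The idea is just that a small classifier is trained to read a property out of frozen vectors, and high held-out accuracy suggests the property is encoded there.

```python
import math
import random

def make_hidden_states(n, dim, rng):
    """Synthetic stand-in for LM hidden states (an assumption, not real activations).
    The binary label is linearly encoded in dimensions 0 and 1."""
    data = []
    for _ in range(n):
        h = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        label = 1 if h[0] + h[1] > 0 else 0
        data.append((h, label))
    return data

def train_probe(data, dim, epochs=50, lr=0.1):
    """Fit a logistic-regression probe with plain SGD. The vectors are never changed."""
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        for h, y in data:
            z = sum(wi * hi for wi, hi in zip(w, h)) + b
            z = max(-30.0, min(30.0, z))  # clamp to keep exp() well behaved
            p = 1.0 / (1.0 + math.exp(-z))
            g = p - y  # gradient of the log loss with respect to z
            w = [wi - lr * g * hi for wi, hi in zip(w, h)]
            b -= lr * g
    return w, b

def accuracy(data, w, b):
    """Fraction of examples where the probe's prediction matches the label."""
    correct = 0
    for h, y in data:
        z = sum(wi * hi for wi, hi in zip(w, h)) + b
        correct += int((1 if z > 0 else 0) == y)
    return correct / len(data)

if __name__ == "__main__":
    rng = random.Random(0)
    train = make_hidden_states(200, 4, rng)
    held_out = make_hidden_states(100, 4, rng)
    w, b = train_probe(train, 4)
    print("held-out probe accuracy:", accuracy(held_out, w, b))
```

Note that a probe scoring well does not by itself show the model uses that information, which is the gap the abstract's interventional baseline is meant to address.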

| Attribute | Unconscious Mind | Generative AI |
|-----------|------------------|---------------|
| Information Processing | Processes vast amounts of information rapidly and automatically, often without conscious awareness (From the first studies of the unconscious mind to consumer neuroscience: A systematic literature review, 2023) | Processes large datasets quickly, extracting patterns and generating outputs without explicit programming for each task (Deep Learning, 2015) |
| Pattern Recognition | Recognizes complex patterns in sensory input and past experiences, influencing behavior and decision-making (Analysis of Sources about the Unconscious Hypothesis of Freud, 2017) | Excels at identifying patterns in training data, forming the basis for generating new content or making predictions (A Survey on Deep Learning in Medical Image Analysis, 2017) |
| Creativity | Contributes to creative insights and problem-solving through unconscious incubation and associative processes (The Study of Cognitive Psychology in Conjunction with Artificial Intelligence, 2023) | Generates novel combinations and ideas by recombining elements from training data in unexpected ways (e.g., GANs in art generation) (Generative Adversarial Networks, 2014) |
| Emotional Processing | Processes emotional information rapidly, influencing mood and behavior before conscious awareness (Unconscious Branding: How Neuroscience Can Empower (and Inspire) Marketing, 2012) | Can generate text or images with emotional content based on patterns in training data, but lacks genuine emotions (Language Models are Few-Shot Learners, 2020) |
| Memory Consolidation | Plays a crucial role in memory consolidation during sleep, strengthening neural connections (The Role of Sleep in Memory Consolidation, 2001) | Analogous processes in some AI systems involve memory consolidation and performance improvement (In search of dispersed memories: Generative diffusion models are associative memory networks, 2024) |
| Implicit Learning | Acquires complex information without conscious awareness, as in procedural learning (Implicit Learning and Tacit Knowledge, 1994) | Learns complex patterns and rules from data without explicit programming, similar to implicit learning in humans (Deep Learning for Natural Language Processing, 2018) |
| Bias and Heuristics | Employs cognitive shortcuts and biases that can lead to systematic errors in judgment (Thinking, Fast and Slow, 2011) | Can amplify biases present in training data, leading to skewed outputs or decision-making (Mind vs. Mouth: On Measuring Re-judge Inconsistency of Social Bias in Large Language Models, 2023) |
| Associative Networks | Forms complex networks of associations between concepts, influencing thought and behavior (The associative basis of the creative process, 2010) | Creates dense networks of associations between elements in training data, enabling complex pattern completion and generation tasks (Attention Is All You Need, 2017) |
| Parallel Processing | Processes multiple streams of information simultaneously (Parallel Distributed Processing: Explorations in the Microstructure of Cognition, 1986) | Utilizes parallel processing architecture (e.g., neural networks) to handle multiple inputs and generate outputs (Next Generation of Neural Networks, 2021) |
| Intuition | Generates rapid, automatic judgments based on unconscious processing of past experiences (Blink: The Power of Thinking Without Thinking, 2005) | Produces quick outputs based on learned patterns, which can appear intuitive but lack genuine understanding (BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding, 2019) |
| Priming Effects | Unconscious exposure to stimuli influences subsequent behavior and cognition (Attention and Implicit Memory: Priming-Induced Benefits and Costs, 2016) | Training on specific datasets can "prime" generative AI to produce biased or contextually influenced outputs (AI Fairness 360: An Extensible Toolkit for Detecting, Understanding, and Mitigating Unwanted Algorithmic Bias, 2018) |
| Symbol Grounding | Grounds abstract symbols in sensorimotor experiences and emotions (The Symbol Grounding Problem, 1990) | Struggles with true symbol grounding, relying instead on statistical correlations in text or other data (Symbol Grounding Through Cumulative Learning, 2006) |
| Metaphorical Thinking | Uses embodied metaphors to understand and reason about abstract concepts (Metaphors We Live By, 1980) | Can generate and use metaphors based on learned patterns but lacks deep understanding of their embodied nature (Deep Learning-Based Knowledge Injection for Metaphor Detection, 2023) |
| Dream Generation | Produces vivid, often bizarre narratives and imagery during REM sleep (The Interpretation of Dreams, 1900) | Some generative models can produce dream-like, surreal content (Video generation models as world simulators, 2024) |
| Cognitive Dissonance | Automatically attempts to reduce inconsistencies between beliefs and behaviors (A Theory of Cognitive Dissonance, 1957) | MoE architectures can handle a wider range of inputs without ballooning model size, suggesting potential for resolving conflicts between different AI components by synthesizing expert opinions into a coherent whole (Optimizing Generative AI Networking, 2024). |

Ideogram prompt for base image (33 generated, 5 candidates):

>The E.S.C. Apes pose for a group photo, wearing E.S.C. branded casual clothing. The setting is a cozy clubhouse. The apes are all unique and distinct from one another, including a chimp, gorilla, and orangutan., cinematic

Ideogram (magic)prompt for E.S.C. logo (12 generations, 2 candidates):

>A sleek and modern logo design for the Educated Simian Club, featuring the stylized silhouette of a primate with a mortarboard cap. The typography is clean and sophisticated, with the words 'Educated Simian Club' in bold, capital letters. The overall design exudes an air of intelligence and exclusivity, perfect for an elite group of primatologists and enthusiasts., typography

Ran ESC logo through illusion diffusion for wood carving look.

Ideogram prompt for black and white Chimp Graduation Photo (8 generations, 3 candidates):

>An extreme close up, black and white photograph of a chimpanzee graduating from college, in the act of throwing their graduation cap into the air. The chimp is clearly thrilled, surrounded by a large group of fellow alumni., cinematic

Photoshop to combine the elements and for generative fill to fix up some errors in the shirts and faces.

Full Speech Claude 3.5 Sonnet

>Ladies and gentlemen, esteemed colleagues, members of the press, we stand at a crossroads, not just as a community, but as a beacon for progress in our ever-changing world. The path before us is both familiar and uncharted – a journey we must embark upon together. Some might say the numbers speak for themselves. But numbers, like whispers in a storm, can be misheard or misinterpreted. What truly matters is the spirit behind those figures – the unwavering commitment of every individual in this room and beyond.

>Our new initiative isn't just a plan; it's a living, breathing entity. It will adapt, evolve, and transform as we move forward. We're not building a rigid structure, but planting seeds that will grow into a forest of opportunity. Now, I could regale you with promises of grandeur or visions of utopia. But let's be honest – we're all too smart for that. Instead, I offer you something far more valuable: potential. The potential for growth, for change, for leaving an indelible mark on the tapestry of our shared history.

>Critics may question our methods. Skeptics may doubt our resolve. But to them, I say: watch closely. Our actions will echo louder than any words I could utter today. As we embark on this journey, remember: flexibility is our strength. What seems set in stone today may be sand tomorrow, ready to be reshaped by the tides of progress.

>In conclusion, I stand before you not with grandiose proclamations, but with a simple truth: the future is ours to mold. And mold it we shall, with steadfast determination and a twinkle in our eye. Thank you, and may our endeavors be as fruitful as they are memorable – or perhaps, just memorable enough.

Three-shot (2 of 3) response to this prompt from Schizopolis (1996):

>I don't need to tell you how critical this is. Hometown. Pay-per-view. The eyes of the media. Here's what I need. It should be lengthy enough to seem substantial yet concise enough to feel breezy. It should be serious, but with a slight wink. It should lay out a new course of action, but one that can change direction at any moment. If you must mention facts and figures, don't do so directly. The general thrust should remain embedded in one's mind forever, but specific words should be forgotten the moment they are heard. It should contain nothing that can't be confirmed or denied. You're writing the speech. Great success will directly benefit you greatly.

Ideogram Prompt by Claude, “Readwell” Voice on 11Labs, Hedra/Vimeo of course

Also See: Worldwide Federated Training Of Language Models

Claude's Summary:

The two papers, "Worldwide Federated Training of Language Models" by Iacob et al. and "The Future of Large Language Model Pre-training is Federated" by Sani et al., both propose using federated learning (FL) as a new paradigm for pre-training large language models (LLMs). The main ideas are:

- FL allows leveraging more data and compute resources from multiple organizations around the world, while keeping the data decentralized and private. This can enable training larger LLMs on more diverse data compared to centralized training.

- FL relaxes synchronization requirements and reduces communication overheads compared to data-parallel distributed training, making it feasible for geographically distributed participants with varying hardware and connectivity.

- The papers present systems and algorithms for enabling efficient federated pre-training of LLMs at billion-parameter scales. Key techniques include allowing participants to modulate their amount of local training based on resource constraints, and partially personalizing models to clusters of participants with related data.

- Experimental results show federated LLM pre-training can match or exceed centralized training performance, with the performance gap narrowing as model size increases to billions of parameters. Larger federated models also converge faster and are more robust.

- Challenges include data and hardware heterogeneity across participants. The papers propose techniques like adaptive aggregation and load balancing to mitigate these issues.

In summary, the papers argue federated learning is a promising new direction for democratizing LLM pre-training by allowing many more organizations to collaboratively train large models on their combined data and compute resources.
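To make the basic idea concrete, here is a toy sketch of plain federated averaging (FedAvg) on a one-parameter model. This is not the systems or algorithms from either paper: the clients, their data, and the names `local_train` and `fed_avg` are all hypothetical, and the "model" is a single weight fit to y = 2x. It only illustrates the pattern the summary describes, where each participant trains locally for a number of steps that suits its resources and only the weights (never the data) go back to the server.

```python
def local_train(w, data, steps, lr=0.05):
    """Run a few gradient-descent steps on one client's private data.
    Model: y_hat = w * x, loss: mean squared error."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def fed_avg(clients, rounds=20, w=0.0):
    """Plain FedAvg: broadcast w, let each client train locally,
    then average the returned weights, weighted by local dataset size."""
    total = sum(len(c["data"]) for c in clients)
    for _ in range(rounds):
        updates = [
            (local_train(w, c["data"], c["local_steps"]), len(c["data"]))
            for c in clients
        ]
        w = sum(w_k * n_k for w_k, n_k in updates) / total
    return w

if __name__ == "__main__":
    # Hypothetical participants: one with more compute (5 local steps),
    # one with less (1 local step). Both hold samples of y = 2x.
    clients = [
        {"data": [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)], "local_steps": 5},
        {"data": [(0.5, 1.0), (1.5, 3.0)], "local_steps": 1},
    ]
    print("learned weight:", fed_avg(clients))
```

Weighting by dataset size is the standard FedAvg choice; the adaptive aggregation and load balancing mentioned in the summary would replace that simple average, and are not shown here.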