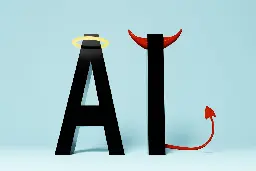

Jailbroken AI Chatbots Can Jailbreak Other Chatbots

www.scientificamerican.com

There is a discussion on Hacker News, but feel free to comment here as well.